Slower/erratic model performance with longer timesteps

Added by Jan Streffing over 3 years ago

Using AWI-CM3 on Levante, I found the following counterintuitive behavior:

With the following settings (timesteps in seconds):

- fesom: `time_step: 1800`, `nproc: 384`
- oifs: `time_step: 3600`, `nproc: 384`, `omp_num_threads: 8`
- oasis3mct: `time_step: 3600`

Simulating one month takes 88 seconds.

When using:

- fesom: `time_step: 2400`, `nproc: 384`
- oifs: `time_step: 3600`, `nproc: 384`, `omp_num_threads: 8`
- oasis3mct: `time_step: 7200`

Simulating one month takes 116 seconds.
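As a quick consistency check on these two configurations, the sketch below counts how many steps each component takes over the simulated month. It assumes a 31-day month (January 2000, matching the run_20000101-20000131 directory) and a fixed timestep per component. The helpers `steps_per_month` and `check_coupling` are hypothetical. The rule that the coupling period must be a whole multiple of each component timestep is an assumption in line with the usual OASIS practice.

```python
# Hypothetical helpers: count steps per component over a 31-day month,
# assuming a fixed timestep for each component and no restarts.
SECONDS_PER_DAY = 86_400
MONTH_SECONDS = 31 * SECONDS_PER_DAY  # January 2000


def steps_per_month(timestep_s: int) -> int:
    """Number of steps of length timestep_s needed to cover the month."""
    if MONTH_SECONDS % timestep_s != 0:
        raise ValueError(f"{timestep_s} s does not divide the month evenly")
    return MONTH_SECONDS // timestep_s


def check_coupling(coupling_s: int, component_steps: dict[str, int]) -> None:
    """Assumed rule: the coupling period is a whole number of steps
    of every coupled component."""
    for name, dt in component_steps.items():
        if coupling_s % dt != 0:
            raise ValueError(
                f"coupling period {coupling_s} s is not a multiple of {name} step {dt} s"
            )


configs = {
    "1800s run": {"fesom": 1800, "oifs": 3600, "oasis3mct": 3600},
    "2400s run": {"fesom": 2400, "oifs": 3600, "oasis3mct": 7200},
}

for label, cfg in configs.items():
    check_coupling(cfg["oasis3mct"], {"fesom": cfg["fesom"], "oifs": cfg["oifs"]})
    counts = {name: steps_per_month(dt) for name, dt in cfg.items()}
    print(label, counts)
```

This gives 1488 vs. 1116 FESOM steps and 744 vs. 372 coupling exchanges, close to the 741 and 369 coupling steps LUCIA reports. OpenIFS takes 744 steps in both runs, so any change in OpenIFS calculation time between the two runs cannot come from additional atmospheric steps.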

Using the LUCIA coupled-model load-balancing tool, I can see that FESOM2 does indeed become faster, as expected from the reduced number of steps (calculation time drops from 71 s to 62 s):

1800s on Levante:

| Component | Calculations (s) | Waiting time (s) | # cpl steps |
|---|---|---|---|
| fesom | 71.07 | 7.30 | 741 |
| oifs | 73.33 | 0.80 | 741 |
| rnfma | 22.53 | 51.61 | 741 |
| xios.x | 0.00 | 0.00 | 0 |

2400s on Levante:

| Component | Calculations (s) | Waiting time (s) | # cpl steps |
|---|---|---|---|
| fesom | 62.03 | 43.35 | 369 |
| oifs | 99.81 | 0.62 | 369 |
| rnfma | 11.21 | 90.81 | 369 |
| xios.x | 0.00 | 0.00 | 0 |

As you can see, however, the calculation time of the atmospheric model OpenIFS inexplicably becomes longer (from 73 s to 100 s). I have not changed the OpenIFS timestep, and it makes no sense that coupling half as often should lengthen the calculation time, let alone by such a substantial amount.

For comparison, I repeated the experiments on JUWELS. There, the 1800s run (FESOM timestep of 1800 s, coupling twice as often) takes longer, as I would expect.

1800s on JUWELS:

| Component | Calculations (s) | Waiting time (s) | # cpl steps |
|---|---|---|---|
| fesom | 57.16 | 50.01 | 741 |
| oifs | 91.80 | 0.47 | 741 |
| rnfma | 18.19 | 74.08 | 741 |
| xios.x | 0.00 | 0.00 | 0 |

2400s on JUWELS:

| Component | Calculations (s) | Waiting time (s) | # cpl steps |
|---|---|---|---|
| fesom | 43.23 | 54.13 | 369 |
| oifs | 78.13 | 0.48 | 369 |
| rnfma | 9.10 | 72.11 | 369 |
| xios.x | 0.00 | 0.00 | 0 |

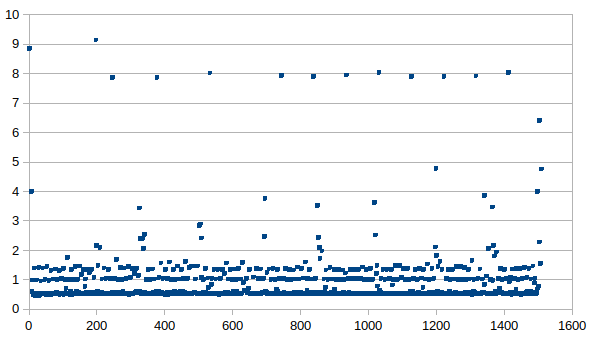
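To put the four LUCIA summaries side by side, the sketch below computes the relative change in calculation time from the 1800s run to the 2400s run for each component on each machine. The values are copied by hand from the summaries above. `lucia_calc` and `relative_change` are illustrative names, not part of LUCIA.

```python
# Calculation times in seconds, (1800s run, 2400s run), copied from the
# LUCIA summaries above.
lucia_calc = {
    "levante": {"fesom": (71.07, 62.03), "oifs": (73.33, 99.81), "rnfma": (22.53, 11.21)},
    "juwels": {"fesom": (57.16, 43.23), "oifs": (91.80, 78.13), "rnfma": (18.19, 9.10)},
}


def relative_change(before: float, after: float) -> float:
    """Fractional change from the 1800s run to the 2400s run."""
    return (after - before) / before


for machine, components in lucia_calc.items():
    for comp, (t1800, t2400) in components.items():
        pct = relative_change(t1800, t2400) * 100
        print(f"{machine:8s} {comp:6s} {pct:+6.1f} %")
```

This makes the contrast explicit: OpenIFS gets about 36 % slower on Levante but about 15 % faster on JUWELS, while fesom and rnfma get faster on both machines.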

A closer look at the length of individual timesteps on Levante reveals that some timesteps seem to have a sort of hiccup:

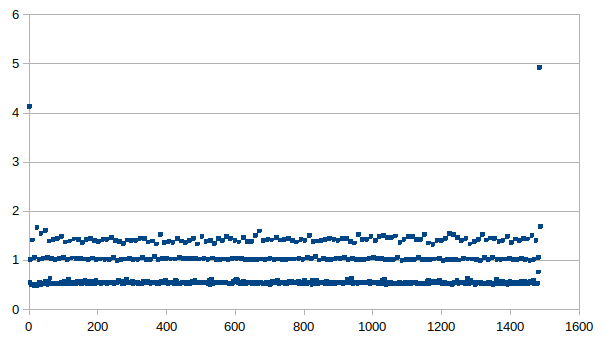

In comparison to the 1800s run on Levante:

Some of this behavior is regular, such as the 8 s long timesteps in the latter two thirds of the run (e.g. steps 408, 504, 600 and 696, i.e. every 96 steps or 4 days). Other parts look random, with an increasing trend (timesteps of 2-6 s). Could this be some kind of MPI issue? I repeated the experiment three times, and it does not seem to be caused by specific nodes.
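The periodicity of the regular hiccups can be checked with a little arithmetic. The sketch below assumes the per-step wall-clock times have already been extracted from ifs.stat into a dict mapping step number to seconds. The parsing is omitted because the exact column layout is not given here. `slow_steps`, its `factor` threshold, and `spacing_in_days` are hypothetical helpers.

```python
from statistics import median


def slow_steps(durations: dict[int, float], factor: float = 2.0) -> list[int]:
    """Return the step numbers whose wall time exceeds factor * median.

    durations maps OpenIFS step number -> wall-clock seconds (hypothetical
    input, e.g. extracted from ifs.stat)."""
    typical = median(durations.values())
    return sorted(step for step, t in durations.items() if t > factor * typical)


def spacing_in_days(steps: list[int], timestep_s: int = 3600) -> list[float]:
    """Gaps between consecutive flagged steps, converted to model days."""
    return [(b - a) * timestep_s / 86_400 for a, b in zip(steps, steps[1:])]


# The slow steps quoted above, with the 3600 s OpenIFS timestep:
print(spacing_in_days([408, 504, 600, 696]))  # [4.0, 4.0, 4.0]
```

With the 3600 s OpenIFS timestep, a gap of 96 steps corresponds to 4 model days, which matches the spacing quoted above.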

You can find two runs here:

/work/ab0246/a270092/runtime/awicm3-v3.1/v3.1_speedtest_1800s/

/work/ab0246/a270092/runtime/awicm3-v3.1/v3.1_speedtest_2400s/

The relevant logfiles are:

/work/ab0246/a270092/runtime/awicm3-v3.1/v3.1_speedtest_2400s/log/v3.1_speedtest_same_as_before_xios_2_awicm3_observe_compute_20000101-20000131.log

/work/ab0246/a270092/runtime/awicm3-v3.1/v3.1_speedtest_2400s/run_20000101-20000131/work/ifs.stat

Is this something you have seen in other models? How can I track down the origin of the issue? I already tested reducing the OpenIFS MPI tasks from 384 to 256 and the OpenMP threads from 8 to 6, but still saw the same behavior.

Replies (1)

RE: Slower/erratic model performance with longer timesteps - Added by Jan Streffing over 3 years ago

I did one more test run on this. This time I changed only one variable: the coupling timestep. The FESOM2 timestep was 1800 s throughout. The result: coupling every two hours is significantly slower than coupling every hour, as shown above.
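Since only the coupling period changed in this test, one hypothetical way to narrow things down is to check whether the extra OpenIFS time is concentrated on the steps that coincide with an OASIS exchange. The sketch assumes per-step wall times from ifs.stat are available as a dict and that step n ends at model time n * 3600 s. Depending on how ifs.stat numbers its steps, the alignment may need an offset. The helper names are illustrative.

```python
from statistics import mean


def split_by_coupling(durations: dict[int, float], atm_dt: int = 3600,
                      cpl_dt: int = 7200) -> tuple[list[float], list[float]]:
    """Separate OpenIFS step times into steps that land on a coupling
    boundary and steps that do not.

    Assumption: step n ends at model time n * atm_dt."""
    coupled, uncoupled = [], []
    for step, seconds in durations.items():
        target = coupled if (step * atm_dt) % cpl_dt == 0 else uncoupled
        target.append(seconds)
    return coupled, uncoupled


def summarize(durations: dict[int, float], atm_dt: int = 3600,
              cpl_dt: int = 7200) -> dict[str, tuple[int, float]]:
    """(count, mean wall time) for coupling steps and for all other steps."""
    coupled, uncoupled = split_by_coupling(durations, atm_dt, cpl_dt)
    return {
        "coupling steps": (len(coupled), mean(coupled) if coupled else float("nan")),
        "other steps": (len(uncoupled), mean(uncoupled) if uncoupled else float("nan")),
    }
```

For the 1800s configuration, pass `cpl_dt=3600`, in which case every OpenIFS step counts as a coupling step and the "other steps" mean is undefined (NaN).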